Introduction

Mail surveys are a popular mode of survey research because they allow precise targeting of small geographic areas and random sampling of units through address-based sampling (Dykema et al. 2015; Link et al. 2008). However, conducting a mail survey involves costs for both the researcher and the respondent. For the respondent, filling out and mailing back a survey is costly in effort, time, and memory. By compensating for these costs, incentives increase survey response rates (RRs) (Church 1993). Monetary incentives sent in advance are more effective than monetary incentives promised upon completion of the survey (Brown et al. 2018; Dykema et al. 2015; Singer and Ye 2013). Mail surveys have an advantage over phone or web surveys in this regard, because they easily allow the delivery of pre-incentives.

For survey researchers, the benefit provided by incentives in increased RRs must be weighed against the additional expense. While many studies have examined the influence of incentive type on RR, the cost-effectiveness of pre-paid and post-paid incentives in mail surveys of the general population has been little reviewed (James and Bolstein 1992). This study seeks to fill the gap by considering the cost-effectiveness of pre-paid incentives—which must be sent to the whole sample—compared to post-paid incentives—which are only sent for received responses. Depending on the incentive amount and corresponding RR by incentive condition, either a pre-paid or post-paid condition could be more cost-effective. In addition to conducting a cost analysis for the original study, a model is proposed for calculating the potential cost-efficiency of a generic incentive study, given variable eligibility criteria represented through factor-adjusted response rates (ARRs).

Method

The surveys were designed by a class of undergraduate research practicum students at the University of Chicago. The questionnaire included items pertaining to politics, social issues, religion, personal habits, and demographics. Randomized address-based samples of units with landlines were purchased for three adjacent neighborhoods on the South Side of Chicago: Kenwood, Hyde Park, and Woodlawn. Each address received an envelope containing the survey, a pre-stamped return envelope, and a signed letter from the director of the University of Chicago Survey Lab. Within two weeks of the initial mailing, each address was mailed a reminder postcard. Surveys were sent out in November 2018, and responses were accepted until February 2019. Labor costs for each sampled case totaled $4.55.

Each address was randomly assigned to an incentive condition: a $2 pre-incentive, $10 post-incentive, or $20 post-incentive (see Table 1). The $2 pre-incentive was sent with the mail survey as a single $2 bill. The $10 and $20 post-incentives were distributed in the form of Target gift cards that were mailed to responding households. The cost to send the post-incentive totaled $1.40.

The original purpose of this research study was to measure RRs across various incentive conditions. This article extends the RR calculation to examine the cost-effectiveness per response received for the $2 pre-incentive, $10 post-incentive, and $20 post-incentive conditions, and to model the cost-effectiveness of these conditions under adjusted response rates (ARRs).

Results

Response rates: Response rates (see Table 1) were calculated according to American Association for Public Opinion Research (AAPOR) RR2 by dividing the number of returned surveys by the number of eligible cases (American Association for Public Opinion Research 2016). Eligible cases were calculated as the total number of sampled households, subtracting survey envelopes that were returned unopened, marked return-to-sender, or no-longer-resident. The overall RR was 39.7% of the eligible sample. The $2 pre-incentive condition elicited an RR of 48.5%, the highest among the three conditions. The households in the $20 post-incentive condition responded at a rate of 42.4%, while those in the $10 post-incentive condition responded at a rate of only 33.5%. While differences between the two post-incentives were modest, the $2 pre-incentive had a positive and significant effect on RRs.

Response rates were further evaluated using logistic regression. The incentive condition was the independent variable in each regression, neighborhood was controlled for, and RR was the dependent variable. A significant main effect was found for the incentive condition, Wald χ2 (2, N = 1000) = 12.17, p = 0.002. Specifically, the $10 post-incentive condition was shown to be significantly less effective than the $2 pre-incentive condition, β = -0.536, SE = 0.154, p < .001. However, the difference between the $2 pre-incentive and the $20 post-incentive was not found to be significant, β = -0.305, SE = 0.10, p = 0.11.

Cost analysis: A summary of costs across incentive conditions is listed in Table 2. Within incentive conditions, both variable costs (e.g., postage, cash incentive, labor costs) and fixed costs (e.g., project management) were included. Total costs were highest for the $10 post-incentive condition at $3,973.60 and lowest for the $2 pre-incentive condition at $1,965.00. The $2 pre-incentive condition also had the lowest cost-per-complete ($15.35), while the $20 post-incentive condition had the highest ($34.40). Cost-per-complete for the pre-paid incentive condition was calculated according to Formula (1); post-paid cost-per-complete was calculated according to Formula (2).

\[C_{pre} = \frac{F + S + I}{RR} \qquad (1)\]

\[C_{post} = \frac{F + (S \times RR) + (I \times RR)}{RR} \qquad (2)\]

The variable C represents cost-per-complete according to whether the incentive condition is pre-paid (indexed pre) or post-paid (indexed post). The fixed costs (F) included any non-incentive related costs for each case (e.g., postage, materials, labor costs). In the study scenario, the cost to send the incentive (S) was $0 for the pre-paid incentive as it was included in the original survey mailing. In the post-paid conditions, S is adjusted according to RR, as only completed cases were sent incentives. The incentive cost (I) is a flat cost for the pre-paid condition, but is adjusted according to RR for post-paid conditions.
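Formulas (1) and (2) can be written as two small functions, shown here as a minimal sketch rather than the authors' own implementation. The function names and the constant `F_ASSUMED` are illustrative. Taking the $4.55 per-case labor cost from the Method section as the whole of F is an assumption, so the values below will not match the cost-per-completes in Table 2, which include further costs.

```python
def cost_per_complete_pre(fixed, send, incentive, rr):
    """Formula (1): every sampled case incurs F, S, and I."""
    if not 0 < rr <= 1:
        raise ValueError("rr must be a proportion in (0, 1]")
    return (fixed + send + incentive) / rr


def cost_per_complete_post(fixed, send, incentive, rr):
    """Formula (2): S and I are incurred only for responding cases."""
    if not 0 < rr <= 1:
        raise ValueError("rr must be a proportion in (0, 1]")
    return (fixed + send * rr + incentive * rr) / rr


# Assumption: F is taken to be the $4.55 per-case labor cost only.
F_ASSUMED = 4.55

# RRs and incentive amounts are those reported in the text;
# S = $1.40 for post-paid conditions and $0 for the pre-paid condition.
pre_2 = cost_per_complete_pre(F_ASSUMED, 0.00, 2.00, 0.485)
post_10 = cost_per_complete_post(F_ASSUMED, 1.40, 10.00, 0.335)
post_20 = cost_per_complete_post(F_ASSUMED, 1.40, 20.00, 0.424)

for label, value in [("$2 pre", pre_2), ("$10 post", post_10), ("$20 post", post_20)]:
    print(f"{label}: {value:.2f} per complete")
```

Note that Formula (2) simplifies to F / RR + S + I: only the per-case cost is spread over fewer completes as RR falls, while the incentive and its mailing cost stay constant per complete.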

In addition to the values specified above, Table 2 includes E, a measure of cost-effectiveness. The ratio of incremental response rates (IRR) to incremental costs (IC), E provides a standardized comparison of the cost-efficiency of incentive conditions against a baseline (Brennan, Seymour, and Gendall 1993). For this study, the $2 pre-incentive condition was treated as the baseline group. If E is greater than 1, then the experimental condition is more cost-effective than the baseline. Since both the $10 and $20 post-incentive conditions had lower RRs than the $2 pre-incentive condition, the E values show that both were also less cost-effective, at -1.45 and -0.15, respectively.

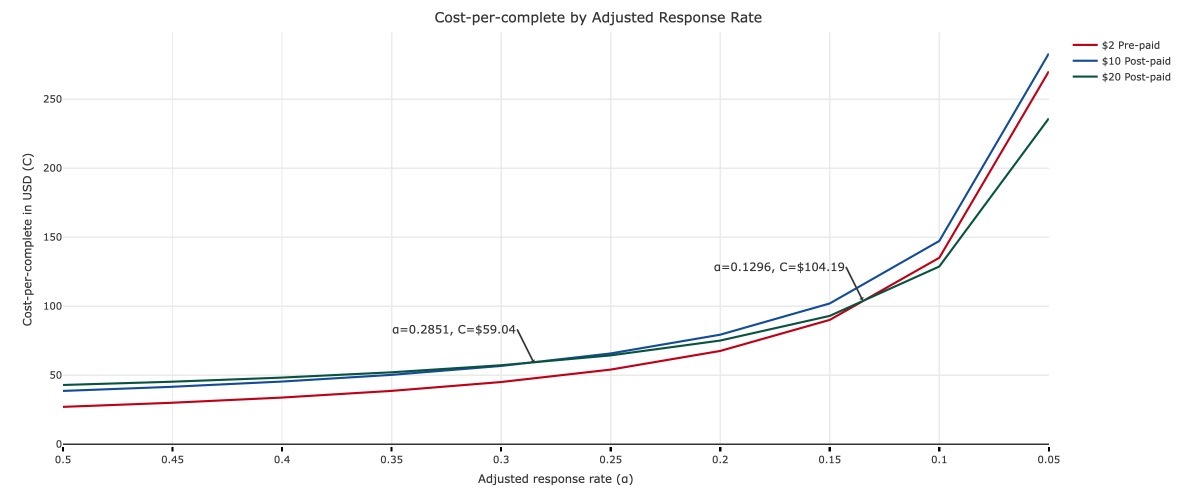

In addition, a hypothetical model was constructed to forecast cost-per-completes for each incentive condition when the overall RR is adjusted. While the original study found that the $2 pre-incentive was the most cost-effective given the RRs of each condition, the comparative cost-efficiency shifts according to the ARR. The adjusted response rate is calculated by multiplying each condition’s RR by an adjustment factor. The projected ARR at which cost-per-completes (C) for two incentive conditions become equivalent is calculated by equating the corresponding formulas (see Formula 1 and Formula 2). In this analysis, the adjustment factor resulting in equivalent C values for both conditions will be referred to as the equivalent adjustment factor (see Table 3).
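The equating step can be sketched as follows for a pre-paid condition compared with a post-paid condition. The symbol a for the adjustment factor and the subscripts on S, I, and RR are notation assumed here for illustration, and the sketch assumes the same per-case cost F and the same factor a in both conditions. Substituting a × RR for RR in Formulas (1) and (2) and setting the two costs equal gives:

\[\frac{F + S_{pre} + I_{pre}}{a \times RR_{pre}} = \frac{F}{a \times RR_{post}} + S_{post} + I_{post}\]

Multiplying through by a and solving yields the equivalent adjustment factor:

\[a = \frac{\dfrac{F + S_{pre} + I_{pre}}{RR_{pre}} - \dfrac{F}{RR_{post}}}{S_{post} + I_{post}}\]

A negative result means the numerator is negative, that is, the pre-paid condition is cheaper per complete at every positive value of a, so no equivalence point exists.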

Table 3 shows that the equivalent adjustment factor for the $2 pre-paid and $20 post-paid conditions was calculated at 0.1296. The calculation comparing the $10 and $20 post-paid conditions found an equivalent adjustment factor of 0.2851. Meanwhile, the equivalence calculation for the $2 pre-paid and $10 post-paid conditions yielded a negative value. An adjustment factor, as a ratio applied to the RR, cannot be negative; therefore, an equivalent C value for the $2 pre-paid and $10 post-paid conditions does not exist. Figure 1 is a visual representation of the ARR cost-per-complete model in Table 3, showing the adjustment factors at which C values become equivalent across incentive conditions.

Discussion

Consistent with previous research, we found that the smaller $2 pre-paid incentive condition produced the highest RR, which was shown to be significantly higher than the $10 post-incentive condition. The $20 post-paid incentive condition also had a lower RR than the pre-paid incentive, but not significantly so. In addition to producing the highest RR, the $2 pre-incentive condition was the most cost-effective. Even accounting for the $2 bills sent to households that did not respond, at a cost-per-complete of $15.35, this condition was far more cost-effective than the $10 and $20 post-incentive conditions (with cost-per-completes of $26.67 and $34.40, respectively). Therefore, while both the $2 pre-incentive and $20 post-incentive produced comparable response rates, the $2 pre-incentive condition is a far more cost-effective option.

However, the cost-per-complete calculation depends on the RR, which could vary widely depending on survey topic, sample, length, and myriad conditions (Dillman, Smyth, and Christian 2014) besides the incentive conditions examined in this paper. Therefore, by modeling cost-per-completes according to RRs adjusted by a variable factor, practitioners can determine the approximate cost-per-complete for their study design by substituting ARR for the RR in Formulas (1) and (2). In the hypothetical projection based on the current study (see Table 3), the $2 pre-paid condition remains the most cost-effective until the RR is adjusted by a factor of 0.1296, or a very low ARR of 6.29%, at which point the $20 post-paid condition has a lower cost-per-complete. However, the cost-per-complete at this point is $104.19, which is an impractically high sum for most study scenarios. On the other hand, the equivalence calculation between the two post-paid conditions shows that when the $20 post-paid RR is adjusted by a factor of 0.2851 (ARR = 12.09%), it becomes more cost-effective than the corresponding $10 post-paid condition. Thus, the higher post-paid incentive actually becomes more cost-effective at low RRs, which may be useful in a scenario where a study design is constrained to post-paid incentives.
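The equivalence points discussed above can be approximated with a short script, offered as a sketch under stated assumptions rather than as the authors' calculation. It assumes that F in the model is the $4.55 per-case labor cost from the Method section, that S is $0 for the pre-paid condition and $1.40 for post-paid conditions, and that one common factor scales the RR of both conditions being compared. The function names are hypothetical.

```python
def equiv_factor_pre_vs_post(fixed, pre_inc, pre_rr, post_send, post_inc, post_rr):
    """Factor a solving (F + I_pre)/(a*RR_pre) = F/(a*RR_post) + S + I_post."""
    return ((fixed + pre_inc) / pre_rr - fixed / post_rr) / (post_send + post_inc)


def equiv_factor_post_vs_post(fixed, low_inc, low_rr, high_inc, high_rr):
    """Factor a at which two post-paid conditions cost the same per complete.

    The mailing cost S appears on both sides and cancels out.
    """
    return fixed * (1 / low_rr - 1 / high_rr) / (high_inc - low_inc)


F = 4.55   # assumption: per-case cost taken from the Method section
S = 1.40   # cost to send a post-paid incentive

a_2_vs_20 = equiv_factor_pre_vs_post(F, 2.00, 0.485, S, 20.00, 0.424)
a_2_vs_10 = equiv_factor_pre_vs_post(F, 2.00, 0.485, S, 10.00, 0.335)
a_10_vs_20 = equiv_factor_post_vs_post(F, 10.00, 0.335, 20.00, 0.424)

# ARR and cost-per-complete at the $2 pre vs $20 post equivalence point
arr_pre = a_2_vs_20 * 0.485
cost_at_equivalence = (F + 2.00) / arr_pre

print(f"$2 pre vs $20 post:  a = {a_2_vs_20:.4f}, ARR = {arr_pre:.2%}")
print(f"$10 post vs $20 post: a = {a_10_vs_20:.4f}, ARR = {a_10_vs_20 * 0.424:.2%}")
print(f"$2 pre vs $10 post:  a = {a_2_vs_10:.4f} (negative: no equivalence point)")
print(f"cost-per-complete at the first equivalence point: about {cost_at_equivalence:.0f}")
```

Under these assumptions the first two factors round to 0.1296 and 0.2851, in line with the values reported in the text, and the $2 pre-paid versus $10 post-paid comparison comes out negative. Practitioners applying the model to their own design would replace F, S, the incentive amounts, and the RRs with their own estimates.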

Conclusion

The current study, which forms the basis for the cost analysis model, does have several limitations. These include the possibility of house effects and the skewed demographics of a landline sample. The survey was mailed to neighborhoods adjacent to the University of Chicago, where residents could have been influenced by the association of the survey with the university. This association was signaled through branding on the envelope label and the letter within. These house effects could have had negative or positive effects on the RR, depending on whether the recipient felt aligned or opposed to the university. In addition, the restriction of the sample to households with landlines means that the targeted households were likely established households with older residents. Our results are therefore particularly relevant for research targeting households with similar characteristics, such as those who receive Medicare or Social Security benefits.

For future studies, a larger sample size would improve the power of the design and allow greater confidence when interpreting nonsignificant findings. Other potential alterations may include adding a higher pre-paid incentive condition, to allow comparison among pre-incentive amounts. Furthermore, measuring and including nonincentive factors that also influence RRs would contribute to a more robust ARR cost-per-complete model.

Author Contact

Neomi Rao

neomi.k.rao@gmail.com